Azure Kubernetes Service Auditing

The AKS assessment is a comprehensive list of factors to consider when preparing a cluster for production. It is based on widely adopted Kubernetes best practices.

DISASTER RECOVERY

- Ensure you can perform a greenfield deployment

- Create a storage migration plan

- Guarantee a 99.95% SLA
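
To make the 99.95% target concrete, the following minimal sketch converts an availability percentage into the downtime it allows. The function name and the 30-day month are illustrative assumptions, not part of any Azure SLA definition.

```python
def allowed_downtime_minutes(sla_percent: float, period_days: float) -> float:
    """Return the downtime, in minutes, that an SLA permits over a period."""
    total_minutes = period_days * 24 * 60
    return total_minutes * (1 - sla_percent / 100)


if __name__ == "__main__":
    # Assumption: a 30-day month and a 365-day year.
    print(f"per month: {allowed_downtime_minutes(99.95, 30):.1f} min")
    print(f"per year:  {allowed_downtime_minutes(99.95, 365) / 60:.2f} h")
```

Under these assumptions, 99.95% leaves roughly 21.6 minutes of downtime per month, or about 4.38 hours per year, which is the budget any recovery plan has to fit into.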

CLUSTER SETUP

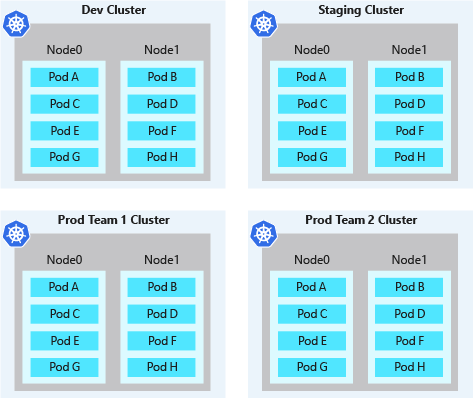

Logically/Physically isolate cluster

Minimize the use of physical isolation for each separate team or application deployment. Instead, use logical isolation.

Logical separation of clusters usually provides a higher pod density than physically isolated clusters, with less excess compute capacity sitting idle in the cluster. When combined with the Kubernetes cluster autoscaler, you can scale the number of nodes up or down to meet demands. This best practice approach to autoscaling minimizes costs by running only the number of nodes required.
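
The density argument can be illustrated with a small calculation. This is a rough sketch with made-up numbers: the team names, CPU demands, and node size are hypothetical, and it ignores system overhead and scheduling constraints.

```python
import math


def nodes_needed(cpu_demand_cores: float, cores_per_node: float) -> int:
    """Smallest whole number of nodes that covers a CPU demand."""
    return math.ceil(cpu_demand_cores / cores_per_node)


# Hypothetical per-team CPU demand, in cores; each node offers 4 usable cores.
team_demand = {"team-a": 5.0, "team-b": 6.0, "team-c": 3.0}
CORES_PER_NODE = 4.0

# Physical isolation: each team rounds up to whole nodes in its own cluster.
isolated = sum(nodes_needed(d, CORES_PER_NODE) for d in team_demand.values())

# Logical isolation: demand is pooled in one cluster before rounding up.
shared = nodes_needed(sum(team_demand.values()), CORES_PER_NODE)

print(isolated, shared)  # prints "5 4"
```

With these numbers, three isolated clusters need five nodes in total, while one shared cluster needs four, because the idle remainder on each team's last node is pooled.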

Logically isolate cluster

Physically isolate cluster

AAD Integration

Use System Node Pools

AKS managed identity (Azure Government isn’t currently supported)

VM Sizing

K8S RBAC + AAD Integration

Private cluster

Enable cluster autoscaling

Sizing of the nodes

Refresh containers when the base image is updated

Use AKS and ACR integration without passwords

Use proximity placement groups to improve performance

DEVELOPMENT

- Implement a proper Liveness probe

- Implement a proper Startup probe

- Implement a proper Readiness probe

- Implement a proper preStop hook

- Run more than one replica for each Deployment

- Store secrets in Azure Key Vault; don’t bake passwords into Docker images

- Implement Pod Identity

- Use Kubernetes namespaces to properly isolate Kubernetes resources

- Set up requests and limits on containers

- Specify the security context of pod/container

- Conduct Docker Image Builds using Docker Image Security

- Static Analysis of Docker Images on Build

- Threshold enforcement of Docker Image Builds that contain vulnerabilities

- Compliance enforcement of Docker Image Builds:

- Scan the container image against vulnerabilities

- Allow deploying containers only from known registries

- Runtime Security of Applications

- Role-Based Access Control (RBAC) to Docker Registries

- Prefer distroless images
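
Several of the items above (probes, preStop hook, replicas, namespace, requests and limits, security context) map directly onto fields of a Deployment manifest. As a minimal sketch, the snippet below builds such a manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. Every name, image, path, port, and number is a hypothetical placeholder to be tuned per application.

```python
import json

# Hypothetical health endpoint shared by all three probes.
health_check = {"httpGet": {"path": "/healthz", "port": 8080}}

container = {
    "name": "web",
    "image": "myregistry.azurecr.io/web:1.0.0",  # placeholder registry and tag
    "ports": [{"containerPort": 8080}],
    # Startup probe: gives a slow-starting app time before the other probes run.
    "startupProbe": {**health_check, "periodSeconds": 5, "failureThreshold": 30},
    # Liveness probe: the kubelet restarts the container when this fails.
    "livenessProbe": {**health_check, "periodSeconds": 10, "failureThreshold": 3},
    # Readiness probe: a failing pod stops receiving Service traffic.
    "readinessProbe": {**health_check, "periodSeconds": 5, "failureThreshold": 3},
    # preStop hook: pause so in-flight requests can drain before shutdown.
    # Assumes the image ships a `sleep` binary; distroless images may not.
    "lifecycle": {"preStop": {"exec": {"command": ["sleep", "10"]}}},
    "resources": {
        "requests": {"cpu": "250m", "memory": "256Mi"},
        "limits": {"cpu": "500m", "memory": "256Mi"},
    },
    "securityContext": {
        "runAsNonRoot": True,
        "allowPrivilegeEscalation": False,
        "readOnlyRootFilesystem": True,
    },
}

deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    # A dedicated namespace ("shop" is a placeholder), not "default".
    "metadata": {"name": "web", "namespace": "shop"},
    "spec": {
        "replicas": 3,  # more than one replica
        "selector": {"matchLabels": {"app": "web"}},
        "template": {
            "metadata": {"labels": {"app": "web"}},
            "spec": {"containers": [container]},
        },
    },
}

print(json.dumps(deployment, indent=2))
```

The probe thresholds here are starting points only; appropriate values depend on how long the application takes to start and how quickly it should be restarted.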

RESOURCE MANAGEMENT

- Enforce resource quotas

- Set memory limits and requests for all containers

- Configure pod disruption budgets

- Set up cluster auto-scaling
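
The "memory limits and requests for all containers" item lends itself to an automated check. The following is a rough sketch of what a CI step could verify against a pod spec loaded as a dictionary; the function name and the sample spec are hypothetical.

```python
def containers_missing_memory(pod_spec: dict) -> list[str]:
    """Return names of containers lacking a memory request or a memory limit."""
    missing = []
    for c in pod_spec.get("containers", []):
        resources = c.get("resources", {})
        has_request = "memory" in resources.get("requests", {})
        has_limit = "memory" in resources.get("limits", {})
        if not (has_request and has_limit):
            missing.append(c["name"])
    return missing


# Hypothetical pod spec: "sidecar" sets a memory request but no limit.
pod_spec = {
    "containers": [
        {
            "name": "web",
            "resources": {
                "requests": {"memory": "256Mi"},
                "limits": {"memory": "256Mi"},
            },
        },
        {"name": "sidecar", "resources": {"requests": {"memory": "64Mi"}}},
    ]
}

print(containers_missing_memory(pod_spec))  # prints "['sidecar']"
```

A check like this only covers the spec under review; a namespace-level resource quota or policy is still needed to enforce the rule cluster-wide.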

CLUSTER MAINTENANCE

- Keep the Kubernetes version up to date

- Keep nodes up to date and patched

- Securely connect to nodes through a bastion host

- Regularly check for cluster issues

- Monitor the security of the cluster with Azure Security Center

- Provision a log aggregation tool

- Enable master node logs

- Collect metrics

- Configure distributed tracing

- Enforce compliance with Azure Policy

- Enable Azure Defender for Kubernetes

- Use Azure Key Vault

- Use GitOps

- Use Kubernetes tools (Helm, K9s, Rancher)

- Don’t use the default namespace

SECURITY

- Don’t expose your load balancer to the Internet unless necessary

- Use Azure Firewall to secure and control all egress traffic going outside of the cluster

QUESTIONS

- How many pods in total does the application run at the maximum expected CCUs?

- How many pods run per node?

- Should you use a few large nodes or many small nodes in the cluster?

  - As always, there is no definitive answer.

  - The type of applications that you want to deploy to the cluster may guide the decision.

  - For example, if an application requires 10 GB of memory, you probably shouldn’t use small nodes: the nodes in the cluster should have at least 10 GB of memory.

  - Or, if an application requires 10-fold replication for high availability, you probably shouldn’t use just 2 nodes: the cluster should have at least 10 nodes.

  - For all the scenarios in between, it depends on your specific requirements.

- Few large nodes vs. many small nodes

  - Few large nodes

    - Pros:

      - Less management overhead

      - Allows running resource-hungry applications

    - Cons:

      - Large number of pods per node

      - Limited replication

      - Higher blast radius

      - Large scaling increments

  - Many small nodes

    - Pros:

      - Reduced blast radius

      - Allows high replication

    - Cons:

      - Large number of nodes

      - More system overhead

      - Lower resource utilisation

      - Pod limits on small nodes
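
The sizing questions above can be explored with a back-of-the-envelope calculation. The sketch below estimates, for one node size, how many pods fit per node, how many nodes are needed, and what share of pods is lost if a single node fails (the blast radius). All workload figures, node sizes, and per-node pod limits are hypothetical, and the estimate ignores resources reserved for the system and DaemonSets.

```python
import math


def plan(total_pods, pod_cpu, pod_mem_gib, node_cpu, node_mem_gib, max_pods_per_node):
    """Estimate (pods per node, node count, blast radius) for one node size."""
    pods_per_node = min(
        math.floor(node_cpu / pod_cpu),          # limited by CPU
        math.floor(node_mem_gib / pod_mem_gib),  # limited by memory
        max_pods_per_node,                       # limited by the per-node pod cap
    )
    if pods_per_node < 1:
        raise ValueError("a single pod does not fit on this node size")
    nodes = math.ceil(total_pods / pods_per_node)
    # Share of all pods lost if one fully packed node fails.
    blast_radius = min(pods_per_node, total_pods) / total_pods
    return pods_per_node, nodes, blast_radius


# Hypothetical workload: 60 pods, each requesting 0.5 CPU and 1 GiB of memory.
large = plan(60, 0.5, 1, node_cpu=16, node_mem_gib=64, max_pods_per_node=110)
small = plan(60, 0.5, 1, node_cpu=4, node_mem_gib=16, max_pods_per_node=30)

for label, (ppn, nodes, radius) in (("few large", large), ("many small", small)):
    print(f"{label}: {ppn} pods/node, {nodes} nodes, blast radius {radius:.0%}")
```

With these numbers, the large size needs 2 nodes and loses about 53% of pods when one node fails, while the small size needs 8 nodes and loses about 13%, which mirrors the trade-offs listed above.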